Multi-Class Classification

The Probabilistic Interpretation

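- $f_\theta(x)$ models the probability $P(y = 1 \mid x)$

- And thus $P(y = 0 \mid x) = 1 - f_\theta(x)$

- …or, more compactly: $P(y \mid x) = f_\theta(x)^{y}\,\big(1 - f_\theta(x)\big)^{1-y}$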

Maximizing the Data Likelihood

- How to measure the likelihood of the observed data?

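- Assume: the examples $(x_1, y_1), \dots, (x_n, y_n)$ are independently generated

- The probability of getting $(x_1, y_1), \dots, (x_n, y_n)$: $P\big((x_1, y_1), \dots, (x_n, y_n)\big) = \prod_{i=1}^{n} P(x_i, y_i) = \prod_{i=1}^{n} P(y_i \mid x_i)\,P(x_i)$

- (Derived from the definition of conditional probability)

- Note that the $P(x_i)$ are constants determined by the data distribution (so we do not need to model them)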
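The point about the constant factors can be checked numerically. The sketch below is only an illustration: the values in `input_probs`, `model_a`, and `model_b` are hypothetical, chosen to show that multiplying by the fixed $P(x_i)$ rescales every candidate model's likelihood by the same amount and so does not change which model is preferred.

```python
import math

def joint_likelihood(cond_probs, input_probs):
    """prod_i P(y_i | x_i) * P(x_i) for independently generated examples."""
    return math.prod(c * p for c, p in zip(cond_probs, input_probs))

def conditional_likelihood(cond_probs):
    """prod_i P(y_i | x_i): the only part that depends on the model."""
    return math.prod(cond_probs)

# Hypothetical P(x_i): fixed by the data distribution, identical for every model.
input_probs = [0.2, 0.5, 0.3]

# Hypothetical P(y_i | x_i) assigned to the observed labels by two candidate models.
model_a = [0.9, 0.8, 0.7]
model_b = [0.6, 0.9, 0.5]

for name, cond in (("A", model_a), ("B", model_b)):
    print(name, joint_likelihood(cond, input_probs), conditional_likelihood(cond))
```

Both models are scaled by the same constant, the product of the $P(x_i)$, so comparing conditional likelihoods gives the same ranking as comparing joint likelihoods.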

Maximizing the Data Likelihood

- Maximum likelihood: $\max_\theta \prod_{i=1}^{n} P(y_i \mid x_i; \theta)$

- How can we tailor this objective function for logistic regression?

Tailoring
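One way the tailoring might go is sketched here, under stated assumptions: the model output $f_\theta(x)$ is taken to be $P(y = 1 \mid x)$ with labels $y_i \in \{0, 1\}$, so that $P(y \mid x) = f_\theta(x)^{y}(1 - f_\theta(x))^{1-y}$. The symbols $f_\theta$ and $\ell$ are notation chosen for this sketch. Taking the logarithm, which does not change the maximizer, turns the product into a sum:

```latex
\begin{align*}
\ell(\theta)
  &= \log \prod_{i=1}^{n} P(y_i \mid x_i; \theta)
   = \sum_{i=1}^{n} \log P(y_i \mid x_i; \theta) \\
  &= \sum_{i=1}^{n} \log \Big( f_\theta(x_i)^{y_i} \big(1 - f_\theta(x_i)\big)^{1 - y_i} \Big) \\
  &= \sum_{i=1}^{n} \Big[ y_i \log f_\theta(x_i) + (1 - y_i) \log \big(1 - f_\theta(x_i)\big) \Big]
\end{align*}
```

Maximizing $\ell(\theta)$ is the same as minimizing $-\ell(\theta)$, which is the binary cross entropy loss.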

Softmax Function

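Given output logits $z = (z_1, \dots, z_K)$, the softmax function returns output probabilities $\mathrm{softmax}(z)_k = e^{z_k} / \sum_{j=1}^{K} e^{z_j}$. For a batch of inputs, the logits form a matrix and softmax is applied to each example's logits, giving a matrix of probabilities.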
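A minimal sketch of the softmax computation in plain Python follows. Subtracting the largest logit before exponentiating is a common numerical-stability trick added here; it is not part of the slide and does not change the result. The helper names `softmax` and `softmax_rows` are chosen for this example.

```python
import math

def softmax(logits):
    """Map a list of logits to probabilities that sum to 1."""
    m = max(logits)  # shift by the max so exp() cannot overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_rows(logit_matrix):
    """Apply softmax independently to each row (one row per example)."""
    return [softmax(row) for row in logit_matrix]

# Two hypothetical examples with three classes each.
probs = softmax_rows([[2.0, 1.0, 0.1], [0.0, 0.0, 0.0]])
for row in probs:
    print(row)
```

Each output row is a probability distribution over the classes; equal logits give a uniform distribution.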

Softmax vs. Sigmoid

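In the binary case, the softmax function is equivalent to the sigmoid function: with two logits $z_1$ and $z_2$, $\mathrm{softmax}(z)_1 = \sigma(z_1 - z_2)$, where $\sigma(t) = 1 / (1 + e^{-t})$.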
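The equivalence can be verified numerically. In this sketch the logit values `z1` and `z0` are arbitrary illustrative numbers. With two classes, the softmax probability of the first class depends only on the difference of the two logits, and that dependence is exactly the sigmoid.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def softmax(logits):
    m = max(logits)  # shift for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

z1, z0 = 1.5, -0.3  # arbitrary logits for class 1 and class 0

p_softmax = softmax([z1, z0])[0]  # two-class softmax probability of class 1
p_sigmoid = sigmoid(z1 - z0)      # sigmoid of the logit difference

print(p_softmax, p_sigmoid)
```

Fixing the second logit at 0 recovers the usual logistic regression form, `softmax([z, 0.0])[0] == sigmoid(z)` up to floating-point error.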

Training Objective for Multi-Class Classification

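- Maximum likelihood: $\max_\theta \sum_{i=1}^{n} \log P(y_i \mid x_i; \theta)$, where $P(y_i \mid x_i; \theta)$ is the softmax probability assigned to the true class $y_i$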

- This is equivalent to minimizing the cross entropy loss (often used in PyTorch)

- Cross entropy between distributions P and Q: $H(P, Q) = -\sum_{k} P(k) \log Q(k)$

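- Given an estimated distribution (returned from the softmax function) and the empirical distribution, we can use the cross entropy of the two distributions as the loss. When the empirical distribution is one-hot on the true class, it returns the negative log of the probability the model assigns to that class. Summed over the training examples, the cross entropy equals the negative log-likelihood, so minimizing it is the same as maximizing the likelihood.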
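The link between cross entropy and likelihood can be illustrated with a small sketch. The `predicted` distribution and the true class index below are hypothetical values. With a one-hot empirical distribution, every term of the cross entropy sum vanishes except the one for the true class.

```python
import math

def cross_entropy(p, q):
    """H(P, Q) = -sum_k P(k) * log Q(k); terms with P(k) = 0 contribute nothing."""
    return -sum(pk * math.log(qk) for pk, qk in zip(p, q) if pk > 0)

predicted = [0.7, 0.2, 0.1]  # hypothetical softmax output
true_class = 1
one_hot = [1.0 if k == true_class else 0.0 for k in range(len(predicted))]

loss = cross_entropy(one_hot, predicted)
nll = -math.log(predicted[true_class])  # negative log-likelihood of the true class

print(loss, nll)
```

The two printed values agree. In PyTorch, `torch.nn.CrossEntropyLoss` computes this quantity from raw logits, applying the log-softmax internally.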

SGD with Cross Entropy Loss

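Loss Function: $L = -\sum_{k} y_k \log \hat{y}_k$, where $\hat{y}$ is the softmax output and $y$ is the one-hot label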

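Gradient w.r.t. the weights $W$: $\nabla_W L = (\hat{y} - y)\,x^\top$

Gradient w.r.t. the bias $b$: $\nabla_b L = \hat{y} - y$

(for a linear model with logits $z = Wx + b$, where $\hat{y}$ is the softmax output and $y$ is the one-hot label)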

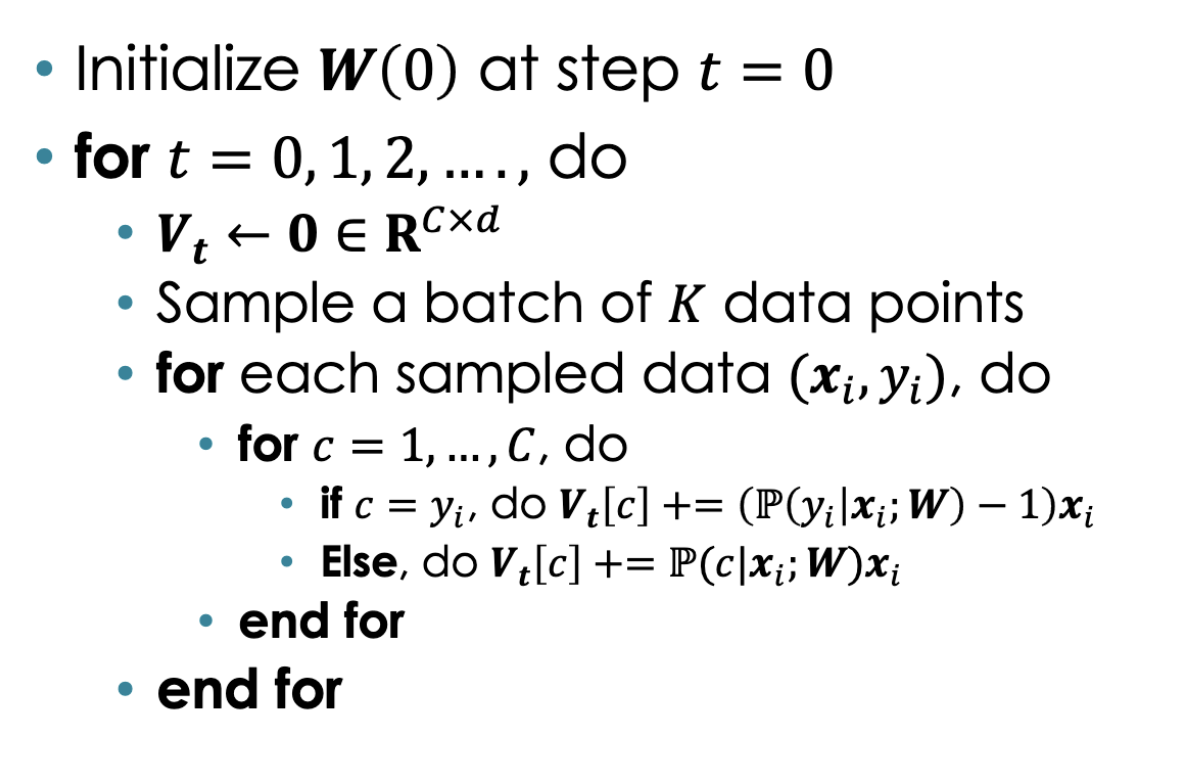
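A single SGD update with cross entropy loss might look like the sketch below. It makes several assumptions that are not in the slides: a linear model with logits $z = Wx + b$, zero initialization, a learning rate of 0.1, and one hypothetical training example. The update uses the standard result that the gradient of the loss with respect to the logits is the predicted probabilities minus the one-hot label.

```python
import math

def softmax(logits):
    m = max(logits)  # shift for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sgd_step(W, b, x, label, lr=0.1):
    """One in-place SGD update on a single example; returns the loss before the update."""
    num_classes = len(W)
    logits = [sum(w * xj for w, xj in zip(W[k], x)) + b[k] for k in range(num_classes)]
    probs = softmax(logits)
    loss = -math.log(probs[label])  # cross entropy with a one-hot label
    for k in range(num_classes):
        err = probs[k] - (1.0 if k == label else 0.0)  # dL/dz_k
        for j in range(len(x)):
            W[k][j] -= lr * err * x[j]  # dL/dW_kj = err * x_j
        b[k] -= lr * err                # dL/db_k = err
    return loss

# Assumed setup: 3 classes, 2 features, all parameters start at zero.
W = [[0.0, 0.0] for _ in range(3)]
b = [0.0, 0.0, 0.0]
x, label = [1.0, 2.0], 2  # one hypothetical training example

losses = [sgd_step(W, b, x, label) for _ in range(20)]
print(losses[0], losses[-1])
```

With zero initialization the first prediction is uniform, so the first loss is $\log 3$. Repeating the step on the same example drives the loss down.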

Pseudocode Initialization: